¶ 1 Overview

This guide describes how to configure and use the Google Speech Recognition (GSR) plugin to the UniMRCP server. The document is intended for users having a certain knowledge of Google Cloud Speech Platform and UniMRCP.

¶ 1.1 Installation

For installation of the packages, use one of the manuals below.

¶ 1.2 Applicable Versions

Instructions provided in this guide are applicable to the following versions.

UniMRCP 1.4.0 and above

UniMRCP GSR Plugin 1.0.0 and above

¶ 2 Supported Features

This is a brief check list of the features currently supported by the UniMRCP server running with the GSR plugin.

¶ 2.1 MRCP Methods

-

DEFINE-GRAMMAR

-

RECOGNIZE

-

START-INPUT-TIMERS

-

STOP

-

SET-PARAMS

-

GET-PARAMS

¶ 2.2 MRCP Events

-

RECOGNITION-COMPLETE

-

START-OF-INPUT

¶ 2.3 MRCP Header Fields

-

Input-Type

-

No-Input-Timeout

-

Recognition-Timeout

-

Speech-Complete-Timeout

-

Speech-Incomplete-Timeout

-

Waveform-URI

-

Media-Type

-

Completion-Cause

-

Confidence-Threshold

-

Start-Input-Timers

-

DTMF-Interdigit-Timeout

-

DTMF-Term-Timeout

-

DTMF-Term-Char

-

Save-Waveform

-

Speech-Language

-

Cancel-If-Queue

-

Sensitivity-Level

¶ 2.4 Grammars

-

Built-in and dynamic speech contexts

-

Built-in/embedded DTMF grammar

-

SRGS XML (limited support)

¶ 2.5 Results

- NLSML

¶ 3 Configuration Format

The configuration file of the GSR plugin is located in /opt/unimrcp/conf/umsgsr.xml. The configuration file is written in XML.

¶ 3.1 Document

The root element of the XML document must be <umsgsr>.

Attributes

| Name | Unit | Description |

|---|---|---|

| license-file | File path | Specifies the license file. File name may include patterns containing '*' sign. If multiple files match the pattern, the most recent one gets used. |

| gapp-credentials-file | File path | Specifies the Google Application Credentials file to use. File name may include patterns containing '*' sign. If multiple files match the pattern, the most recent one gets used. |

Parent

- None.

Children

| Name | Unit | Description |

|---|---|---|

| streaming-recognition | String | Specifies parameters of streaming recognition employed via gRPC. |

| speech-contexts | String | Contains a list of speech contexts. |

| speech-dtmf-input-detector | String | Specifies parameters of the speech and DTMF input detector. |

| utterance-manager | String | Specifies parameters of the utterance manager. |

| rdr-manager | String | Specifies parameters of the Recognition Details Record (RDR) manager. |

| monitoring-agent | String | Specifies parameters of the monitoring manager. |

| license-server | String | Specifies parameters used to connect to the license server. The use of the license server is optional. |

| webook | String | Specifies parameters of the webhook service. |

Example

This is an example of a bare document.

<umsgsr license-file="umsgsr_*.lic" gapp-credentials-file="*.json">

</umsgsr>

¶ 3.2 Streaming Recognition

This element specifies parameters of streaming recognition.

Attributes

| Name | Unit | Description |

|---|---|---|

| language | String | Specifies the default language to use, if not set by the client. For a list of supported languages, visit https://cloud.google.com/speech/docs/languages |

| interim-results | Boolean | Specifies whether to request interim results or not. |

| start-of-input | String | Specifies the source of start of input event sent to the client (use "service-originated" for an event originated based on a first-received interim result and "internal" for an event determined by plugin). Available since GSR 1.6.0. |

| max-alternatives | Integer | Specifies the maximum number of speech recognition result alternatives to be returned. Can be overridden by client by means of the header field N-Best-List-Length. |

| alternatives-below-threshold | Boolean | Specifies whether to return speech recognition result alternatives with the confidence score below the confidence threshold. Available since GSR 1.9.0. |

| confidence-format | String | Specifies the format of the confidence score to be returned (use "auto" for a format based on protocol version, "mrcpv2" for a float value in the range of 0..1, "mrcpv1" for an integer value in the range of 0..100). Available since GSR 1.9.0. |

| single-utterance | Boolean | Specifies whether to detect a single spoken utterance or perform continuous recognition. Available since GSR 1.4.0. |

| results-indent | Integer | Specifies the indentation used to compose NLSML results. |

| skip-unsupported-grammars | Boolean | Specifies whether to skip or raise an error while referencing a malformed or not supported grammar. Available since GSR 1.8.0. |

| skip-empty-results | Boolean | Specifies whether to implicitly initiate a new gRPC request if the current one completes with an empty result. Available since GSR 1.16.0. |

| transcription-grammar | String | Specifies the name of the built-in speech transcription grammar. The grammar can be referenced as builtin:speech/transcribe or builtin:grammar/transcribe, where transcribe is the default value of this parameter. Available since GSR 1.8.0. |

| http-proxy | String | Specifies the URI of HTTP proxy, if used. Available since GSR 1.12.0. |

| profanity-filter | Boolean | Specifies whether to attempt to filter out profanities. Available since GSR 1.14.0. |

| word-time-offsets | Boolean | Specifies whether to return word-level time offset information. Available since GSR 1.14.0. |

| auto-punctuation | Boolean | Specifies whether to enable automatic punctuation. Available since GSR 1.14.0. |

| use-enhanced | Boolean | Specifies whether to use enhanced model for speech recognition. |

| model | String | Specifies the domain-specific model, if used. Find available values at https://cloud.google.com/speech-to-text/docs/reference/rpc/google.cloud.speech.v1#recognitionconfig. Available since GSR 1.14.0. |

| stream-creation-timeout | Time interval [msec] | Specifies how long to wait for gRPC stream creation. If timeout is set 0, no timer is used. Otherwise, if timeout is elapsed, gRPC stream creation is cancelled. Available since GSR 1.15.0. |

| grpc-log-redirection | Boolean | Specifies whether to enable gRPC log redirection. Available since GSR 1.15.0. |

| grpc-log-verbosity | String | Specifies gRPC logging verbosity. One of DEBUG, INFO, ERROR. See GRPC_VERBOSITY for more info. Available since GSR 1.15.0. |

| grpc-log-trace | String | Specifies a comma separated list of tracers producing gRPC logs. Use 'all' to turn all tracers on. See GRPC_TRACE for more info. Available since GSR 1.15.0. |

| inter-result-timeout | Time interval [msec] | Specifies a timeout between interim results containing transcribed speech. If the timeout is elapsed, input is considered complete. The timeout defaults to 0 (disabled). Available since GSR 1.16.0. |

| speaker-diarization | Boolean | Specifies whether to enable speaker diarization. Available since GSR 1.18.0. |

| min-speaker-count | Integer | Specifies the minimum number of speakers in the conversation. Available since GSR 1.18.0. |

| max-speaker-count | Integer | Specifies the maximum number of speakers in the conversation. Available since GSR 1.18.0. |

| tag-format | String | Specifies the format of the instance element to be returned. Use one of:

|

| api | String | Specifies the Speech-to-Text API. Use one of:

|

| alternate-languages | String | Specifies a comma-separated list of alternate languages. Available since GSR 1.19.0 in v1p1beta1 and GSR 1.25.0 in v1. |

| service-uri | String | Specifies the service endpoint and defaults to speech.googleapis.com: 443. Available since GSR 1.20.0. |

| region | String | Specifies the region. The global endpoint is used if the region is not specified. Available since GSR 1.20.0. |

| word-confidence | Boolean | Specifies whether to return word-level confidence. Available since GSR 1.21.0 in v1p1beta1 and GSR 1.25.0 in v1. |

| spoken-punctuation | Boolean | Specifies whether to enable spoken punctuation. Available since GSR 1.21.0 in v1p1beta1 and GSR 1.25.0 in v1. |

| spoken-emojis | Boolean | Specifies whether to enable spoken emojis. Available since GSR 1.21.0 in v1p1beta1 and GSR 1.25.0 in v1. |

| grammar-param-separator | Char | Specifies a seprator of optional parameters passed to a built-in grammar. The separator defaults to ';'. Available since GSR 1.23.0. |

| sort-alternatives | Boolean | Specifies whether to sort speech recognition result alternatives to ensure the order based on confidence score (the highest first). Available since GSR 1.23.0. |

| grpc-voice-activity-events | Boolean | Specifies whether to enable voice activity events. Available since GSR 1.28.0. |

| grpc-speech-start-timeout | Time interval [msec] | Specifies duration in msec to timeout the stream if no speech begins. Available since GSR 1.28.0. |

| grpc-speech-end-timeout | Time interval [msec] | Specifies duration in msec to timeout the stream after speech ends. Available since GSR 1.28.0. |

| project-id | String | Specifies the project id used in the resource name of the recognizer. This parameter is observed only when the api is set to v2. Available since GSR 1.29.0. |

| recognizer-id | String | Specifies the recognizer id used in the resource name of the recognizer. This parameter is observed only when the api is set to v2. Available since GSR 1.29.0. |

| recognizer-name | String | Specifies the full recognizer name. This parameter is observed only when the api is set to v2. Available since GSR 1.29.0. |

| grpc-keepalive-time | Time interval [msec] | Specifies the interval in milliseconds between PING frames. Defaults to 0 (disabled). Available since GSR 1.30.0. |

| grpc-keepalive-timeout | Time interval [msec] | Specifies the timeout in milliseconds for a PING frame to be acknowledged. Defaults to 0 (disabled). Available since GSR 1.30.0. |

| grpc-keepalive-without-calls | Time interval [msec] | Specifies whether to send keepalive pings from the client without any outstanding streams. Defaults to false (disabled). Available since GSR 1.30.0. |

Parent

<umsgsr>

Children

- None.

Example

This is an example of streaming recognition element.

<streaming-recognition

interim-results="true"

start-of-input="service-originated"

language="en-US"

max-alternatives="1"

alternatives-below-threshold="false"

confidence-format="auto"

single-utterance="true"

results-indent="2"

skip-unsupported-grammars="true"

transcription-grammar="transcribe"

profanity-filter="false"

word-time-offsets="false"

auto-punctuation="false"

use-enhanced="false"

/>

¶ 3.3 Speech Contexts

This element specifies a list of speech contexts.

Availability

>= GSR 1.1.0.

Attributes

- None.

Parent

<umsgsr>

Children

<speech-context>

Example

The example below defines two speech contexts booking and directory.

<speech-contexts>

<speech-context id="booking" enable="true">

<phrase>I would like to book a flight from New York to Rome with a ticket eligible for free cancellation</phrase>

<phrase>I would like to book a one-way flight from New York to Rome</phrase>

</speech-context>

<speech-context id="directory" enable="true">

<phrase>call Steve</phrase>

<phrase>call John</phrase>

<phrase>dial 5</phrase>

<phrase>dial 6</phrase>

</speech-context>

</speech-contexts>

¶ 3.4 Speech Context

This element specifies a speech context.

Availability

>= GSR 1.1.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| id | String | Specifies a unique string identifier of the speech context to be referenced by the MRCP client. |

| enable | Boolean | Specifies whether the speech context is enabled or disabled. |

| speech-complete | Boolean | Specifies whether to complete input as soon as an interim result matches one of the specified phrases. Available since GSR 1.9.0. |

| language | String | The language the phrases are defined for. Available since GSR 1.10.0. |

| scope | String | Specifies a scope of the speech context, which can be set to either hint or strict. Available since GSR 1.12.0. |

Parent

<speech-contexts>

Children

<phrase>

Example

This is an example of speech context element.

<speech-context id="directory" enable="true">

<phrase>call Steve</phrase>

<phrase>call John</phrase>

<phrase>dial 5</phrase>

<phrase>dial 6</phrase>

</speech-context>

¶ 3.5 Phrase

This element specifies a phrase in the speech context.

¶ Availability

>= GSR 1.1.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| tag | String | Specifies an optional arbitrary string identifier to be returned as an instance in the NLSML result, if the transcription result matches the phrase. Available since GSR 1.9.0. |

Parent

<speech-context>

Children

- None.

This is an example of a speech context with phrases having tags specified. Available since GSR 1.9.0.

<speech-context id="boolean" speech-complete="true" scope="strict" enable="true">

<phrase tag="true">yes</phrase>

<phrase tag="true">sure</phrase>

<phrase tag="true">correct</phrase>

<phrase tag="false">no</phrase>

<phrase tag="false">not sure</phrase>

<phrase tag="false">incorrect </phrase>

</speech-context>

This is an example of a speech context with a phrase having set to the class token $TIME. Available since GSR 1.17.0.

<speech-context id="time" enable="true">

<phrase>$TIME</phrase>

</speech-context>

¶ 3.6 Speech and DTMF Input Detector

This element specifies parameters of the speech and DTMF input detector.

Attributes

| Name | Unit | Description |

|---|---|---|

| vad-mode | Integer | Specifies an operating mode of VAD in the range of [0 ... 3]. Default is 1. Available since GSR 1.1.0. |

| speech-start-timeout | Time interval [msec] | Specifies how long to wait in transition mode before triggering a start of speech input event. |

| speech-complete-timeout | Time interval [msec] | Specifies how long to wait in transition mode before triggering an end of speech input event. The complete timeout is used when there is an interim result available. |

| speech-incomplete-timeout | Time interval [msec] | Specifies how long to wait in transition mode before triggering an end of speech input event. The incomplete timeout is used as long as there is no interim result available. Afterwards, the complete timeout is used. Available since GSR 1.4.0. |

| noinput-timeout | Time interval [msec] | Specifies how long to wait before triggering a no-input event. |

| input-timeout | Time interval [msec] | Specifies how long to wait for input to complete. |

| dtmf-interdigit-timeout | Time interval [msec] | Specifies a DTMF inter-digit timeout. |

| dtmf-term-timeout | Time interval [msec] | Specifies a DTMF input termination timeout. |

| dtmf-term-char | Character | Specifies a DTMF input termination character. |

| speech-leading-silence | Time interval [msec] | Specifies desired silence interval preceding spoken input. |

| speech-trailing-silence | Time interval [msec] | Specifies desired silence interval following spoken input. |

| speech-output-period | Time interval [msec] | Specifies an interval used to send speech frames to the recognizer. |

Parent

<umsgsr>

Children

- None.

Example

The example below defines a typical speech and DTMF input detector having the default parameters set.

<speech-dtmf-input-detector

vad-mode="2"

speech-start-timeout="300"

speech-complete-timeout="1000"

speech-incomplete-timeout="3000"

noinput-timeout="5000"

input-timeout="10000"

dtmf-interdigit-timeout="5000"

dtmf-term-timeout="10000"

dtmf-term-char=""

speech-leading-silence="300"

speech-trailing-silence="300"

speech-output-period="200"

/>

¶ 3.7 Utterance Manager

This element specifies parameters of the utterance manager.

Availability

>= GSR 1.3.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| save-waveforms | Boolean | Specifies whether to save waveforms or not. |

| purge-existing | Boolean | Specifies whether to delete existing records on start-up. |

| max-file-age | Time interval [min] | Specifies a time interval in minutes after expiration of which a waveform is deleted. Set 0 for infinite. |

| max-file-count | Integer | Specifies the max number of waveforms to store. If reached, the oldest waveform is deleted. Set 0 for infinite. |

| waveform-base-uri | String | Specifies the base URI used to compose an absolute waveform URI. |

| waveform-folder | Dir path | Specifies a folder the waveforms should be stored in. |

| file-prefix | String | Specifies a prefix used to compose the name of the file to be stored. Defaults to 'umsgsr-', if not specified. |

| use-logging-tag | Boolean | Specifies whether to use the MRCP header field Logging-Tag, if present, to compose the name of the file to be stored. Available since GSR 1.15.0. |

Parent

<umsgsr>

Children

- None.

Example

The example below defines a typical utterance manager having the default parameters set.

<utterance-manager

save-waveforms="false"

purge-existing="false"

max-file-age="60"

max-file-count="100"

waveform-base-uri="http://localhost/utterances/"

waveform-folder=""

/>

¶ 3.8 RDR Manager

This element specifies parameters of the Recognition Details Record (RDR) manager.

Availability

>= GSR 1.3.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| save-records | Boolean | Specifies whether to save recognition details records or not. |

| purge-existing | Boolean | Specifies whether to delete existing records on start-up. |

| max-file-age | Time interval [min] | Specifies a time interval in minutes after expiration of which a record is deleted. Set 0 for infinite. |

| max-file-count | Integer | Specifies the max number of records to store. If reached, the oldest record is deleted. Set 0 for infinite. |

| record-folder | Dir path | Specifies a folder to store recognition details records in. Defaults to ${UniMRCPInstallDir}/var. |

| file-prefix | String | Specifies a prefix used to compose the name of the file to be stored. Defaults to 'umsgsr-', if not specified. |

| use-logging-tag | Boolean | Specifies whether to use the MRCP header field Logging-Tag, if present, to compose the name of the file to be stored. Available since GSR 1.15.0. |

Parent

<umsgsr>

Children

- None.

Example

The example below defines a typical utterance manager having the default parameters set.

<rdr-manager

save-records="false"

purge-existing="false"

max-file-age="60"

max-file-count="100"

waveform-folder=""

/>

¶ 3.9 Monitoring Agent

This element specifies parameters of the monitoring agent.

Availability

>= GSR 1.3.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| refresh-period | Time interval [sec] | Specifies a time interval in seconds used to periodically refresh usage details. See <usage-refresh-handler>. |

Parent

<umsgsr>

Children

<usage-change-handler><usage-refresh-handler>

Example

The example below defines a monitoring agent with usage change and refresh handlers.

<monitoring-agent refresh-period="60">

<usage-change-handler>

<log-usage enable="true" priority="NOTICE"/>

</usage-change-handler>

<usage-refresh-handler>

<dump-channels enable="true" status-file="umsgsr-channels.status"/>

</usage-refresh-handler >

</monitoring-agent>

¶ 3.10 Usage Change Handler

This element specifies an event handler called on every usage change.

Availability

>= GSR 1.3.0.

Attributes

- None.

Parent

<monitoring-agent>

Children

<log-usage><update-usage><dump-channels>

Example

This is an example of the usage change event handler.

<usage-change-handler>

<log-usage enable="true" priority="NOTICE"/>

<update-usage enable="false" status-file="umsgsr-usage.status"/>

<dump-channels enable="false" status-file="umsgsr-channels.status"/>

</usage-change-handler>

¶ 3.11 Usage Refresh Handler

This element specifies an event handler called periodically to update usage details.

Availability

>= GSR 1.3.0.

Attributes

- None.

Parent

<monitoring-agent>

Children

<log-usage><update-usage><dump-channels>

Example

This is an example of the usage change event handler.

<usage-refresh-handler>

<log-usage enable="true" priority="NOTICE"/>

<update-usage enable="false" status-file="umsgsr-usage.status"/>

<dump-channels enable="false" status-file="umsgsr-channels.status"/>

</usage-refresh-handler>

¶ 3.12 License Server

This element specifies parameters used to connect to the license server.

Availability

>= GSR 1.2.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| enable | Boolean | Specifies whether the use of license server is enabled or not. If enabled, the license-file attribute is not honored. |

| server-address | String | Specifies the IP address or host name of the license server. |

| certificate-file | File path | Specifies the client certificate used to connect to the license server. File name may include patterns containing a * sign. If multiple files match the pattern, the most recent one gets used. |

| ca-file | File path | Specifies the certificate authority used to validate the license server. |

| channel-count | Integer | Specifies the number of channels to check out from the license server. If not specified or set to 0, either all available channels or a pool of channels will be checked based on the configuration of the license server. With dynamic distribution of licenses available in v3 api, this parameter indicates initial number of channels. |

| max-channel-count | Integer | Specifies the upper bound of channels which can be allocated to this instance by the license server. If not specified, no upper bound is used. This parameter is observed only when api is set to v3 and distrib-type is not static. Available since GSR 1.27.0. |

| min-channel-count | Integer | Specifies the lower bound of channels which can be allocated to this instance by the license server. If not specified, no lower bound is used. This parameter is observed only when api is set to v3 and distrib-type is not static. Available since GSR 1.27.0. |

| expand-channel-count | Integer | Specifies the expanding window shall additional channels be required. This parameter is observed only when api is set to v3 and distrib-type is not static. If not specified, then the parameter is implicitly set to 10% of the total licenses by the license server. Available since GSR 1.27.0. |

| shrink-channel-count | Integer | Specifies the shrinking window shall excessive channels be available to release back to the license server. This parameter is observed only when api is set to v3 and distrib-type is not static. If not specified, then the parameter is implicitly set to 20% of the total licenses by the license server. Available since GSR 1.27.0. |

| distrib-type | String | Specifies the license distribution type. One of "dynamic" or "static". This parameter is observed only when api is set to v3. If not specified, then the parameter defaults to dynamic with v3. Static distribution is always used with v2. Available since GSR 1.27.0. |

| http-proxy-address | String | Specifies the IP address or host name of the HTTP proxy server, if used. Available since GSR 1.15.0. |

| http-proxy-port | Integer | Specifies the port number of the HTTP proxy server, if used. Available since GSR 1.15.0. |

| security-level | Integer | Specifies the SSL security level, which defaults to 1. Applicable since OpenSSL 1.1.0. Available since GSR 1.23.0. |

| api | String | Specifies the API version. One of v2 or v3. Defaults to v2. Do not use v3 unless you are instructed the version of the license server you use supports v3. Available since GSR 1.27.0. |

| connect-cycles | String | Specifies 3-tiered cycles of attempts and timeouts used to reconnect to the license server shall the connection is lost. For example, "{3,5},{60,5},{3600,24}" will stand for 5 attempts every 3 seconds, then 5 attempts every minute, then 24 attempts every hour. If the connection cannot be established after the final cycle, the service will be declined. |

Parent

<umsgsr>

Children

- None.

Example

The example below defines a typical configuration which can be used to connect to a license server located, for example, at 10.0.0.1.

<license-server

enable="true"

server-address="10.0.0.1"

certificate-file="unilic_client_*.crt"

ca-file="unilic_ca.crt"

/>

¶ 3.13 Webhook

This element specifies parameters of the webhook service.

Availability

>= GSR 1.22.0.

Attributes

| Name | Unit | Description |

|---|---|---|

| enable | Boolean | Specifies whether to enable the webhook service agent. |

| service-uri | String | Specifies the gRPC endpoint of the webhook service. |

| secure-connection | Boolean | Specifies whether to use an encrypted TLS or an insecure TCP connection to the service. |

| auth-token | String | Specifies the auth token used in the requests sent to the webhook service. |

| deadline | Time interval [msec] | Specifies the gRPC call deadline. The deadline is specified in ms and defaults to 0 (disabled). |

Parent

<umsgsr>

Children

- None.

Example

The example below defines a configuration of the webhook service located, for example, at 10.0.0.1.

<webhook

enable="true"

service-uri="10.0.0.1:50051"

secure-connection="false"

auth-token=""

deadline="0"

/>

¶ 4 Configuration Steps

This section outlines common configuration steps.

¶ 4.1 Using Default Configuration

The default configuration should be sufficient for the general use.

¶ 4.2 Specifying Recognition Language

Recognition language can be specified by the client per MRCP session by means of the header field Speech-Language set in a SET-PARAMS or RECOGNIZE request. Otherwise, the parameter language set in the configuration file umsgsr.xml is used. The parameter defaults to en-US.

For supported languages and their corresponding codes, visit the following link.

Since GSR 1.11.0, the recognition language can also be set by the attribute xml:lang specified in the SRGS grammar.

<?xml version="1.0" encoding="UTF-8"?>

<grammar mode="voice" root="transcribe" version="1.0"

xml:lang="en-AU"

xmlns="http://www.w3.org/2001/06/grammar">

<meta name="scope" content="builtin"/>

<rule id="transcribe"><one-of/></rule>

</grammar>

Since GSR 1.12.0, the recognition language can also be set by the optional parameter language passed to a built-in grammar.

builtin:speech/transcribe?language=en-AU

¶ 4.3 Specifying Sampling Rate

Sampling rate is determined based on the SDP negotiation. Refer to the configuration guide of the UniMRCP server on how to specify supported encodings and sampling rates to be used in communication between the client and server.

The native sampling rate with the linear16 audio encoding is used in gRPC streaming to the Google Cloud Speech service.

¶ 4.4 Specifying Speech Input Parameters

While the default parameters specified for the speech input detector are sufficient for the general use, various parameters can be adjusted to better suit a particular requirement.

- speech-start-timeout

This parameter is used to trigger a start of speech input. The shorter is the timeout, the sooner a START-OF-INPUT event is delivered to the client. However, a short timeout may also lead to a false positive.

- speech-complete-timeout

This parameter is used to trigger an end of speech input. The shorter is the timeout, the shorter is the response time. However, a short timeout may also lead to a false positive.

Note that both events, an expiration of the speech complete timeout and an END-OF-SINGLE-UTTERANCE response delivered from the Google Cloud Speech service, are monitored to trigger an end of speech input, on whichever comes first basis. In order to rely solely on an event delivered from the speech service, the parameter speech-complete-timeout needs to be set to a higher value.

- vad-mode

This parameter is used to specify an operating mode of the Voice Activity Detector (VAD) within an integer range of [0 … 3]. A higher mode is more aggressive and, as a result, is more restrictive in reporting speech. The parameter can be overridden per MRCP session by setting the header field Sensitivity-Level in a SET-PARAMS or RECOGNIZE request. The following table shows how the Sensitivity-Level is mapped to the vad-mode.

| Sensitivity-Level | Vad-Mode |

|---|---|

| [0.00 ... 0.25) | 0 |

| [0.25 … 0.50) | 1 |

| [0.50 ... 0.75) | 2 |

| [0.75 ... 1.00] | 3 |

¶ 4.5 Specifying DTMF Input Parameters

While the default parameters specified for the DTMF input detector are sufficient for the general use, various parameters can be adjusted to better suit a particular requirement.

- dtmf-interdigit-timeout

This parameter is used to set an inter-digit timeout on DTMF input. The parameter can be overridden per MRCP session by setting the header field DTMF-Interdigit-Timeout in a SET-PARAMS or RECOGNIZE request.

- dtmf-term-timeout

This parameter is used to set a termination timeout on DTMF input and is in effect when dtmf-term-char is set and there is a match for an input grammar. The parameter can be overridden per MRCP session by setting the header field DTMF-Term-Timeout in a SET-PARAMS or RECOGNIZE request.

- dtmf-term-char

This parameter is used to set a character terminating DTMF input. The parameter can be overridden per MRCP session by setting the header field DTMF-Term-Char in a SET-PARAMS or RECOGNIZE request.

¶ 4.6 Specifying No-Input and Recognition Timeouts

- noinput-timeout

This parameter is used to trigger a no-input event. The parameter can be overridden per MRCP session by setting the header field No-Input-Timeout in a SET-PARAMS or RECOGNIZE request.

- input-timeout

This parameter is used to limit input (recognition) time. The parameter can be overridden per MRCP session by setting the header field Recognition-Timeout in a SET-PARAMS or RECOGNIZE request.

¶ 4.7 Specifying Speech Recognition Mode

Single Utterance Mode

By default, if the configuration parameter single-utterance is set to true, recognition is performed in the single utterance mode and is terminated upon an expiration of the speech complete timeout or an END-OF-SINGLE-UTTERANCE response delivered from the Google Cloud Speech service.

Continuous Recognition Mode

In the continuous speech recognition mode, when the configuration parameter single-utterance is set to false, recognition is terminated upon an expiration of the speech complete timeout, which is recommended to be set in the range of 1500 msec to 3000 msec. The Google Cloud Speech service may return multiple results (sub utterances), which are concatenated and sent back to the MRCP client in a single RECOGNITION-COMPLETE event.

The parameter single-utterance can be overridden per MRCP session by setting the header field Vendor-Specific-Parameters in a SET-PARAMS or RECOGNIZE request, where the parameter name is single-utterance and acceptable values are true and false.

¶ 4.8 Specifying Vendor-Specific Parameters

The following parameters can optionally be specified by the MRCP client in SET-PARAMS, DEFINE-GRAMMAR and RECOGNIZE requests via the MRCP header field Vendor-Specific-Parameters.

| Name | Unit | Description |

|---|---|---|

| start-of-input | String | Specifies the source of start of input event sent to the client (use "service-originated" for an event originated based on a first-received interim result and "internal" for an event determined by plugin). Available since GSR 1.6.0. |

| alternatives-below-threshold | Boolean | Specifies whether to return speech recognition result alternatives with the confidence score below the confidence threshold. Available since GSR 1.9.0. |

| single-utterance | Boolean | Specifies whether to detect a single spoken utterance or perform continuous recognition. Available since GSR 1.4.0. |

| profanity-filter | Boolean | Specifies whether to attempt to filter out profanities. Available since GSR 1.14.0. |

| word-time-offsets | Boolean | Specifies whether to return word-level time offset information. Available since GSR 1.14.0. |

| auto-punctuation | Boolean | Specifies whether to enable automatic punctuation. Available since GSR 1.14.0. |

| use-enhanced | Boolean | Specifies whether to use enhanced model for speech recognition. Available since GSR 1.14.0. |

| model | String | Specifies the domain-specific model, if used. Find available values at https://cloud.google.com/speech-to-text/docs/reference/rpc/google.cloud.speech.v1#recognitionconfig. Available since GSR 1.14.0. |

| speech-start-timeout | Time interval [msec] | Specifies how long to wait in transition mode before triggering a start of speech input event. Available since GSR 1.15.0. |

| skip-empty-results | Boolean | Specifies whether to implicitly initiate a new gRPC request if the current one completes with an empty result. Available since GSR 1.16.0. |

| interim-result-timeout | Time interval [msec] | Specifies a timeout between interim results containing transcribed speech. If the timeout is elapsed, input is considered complete. Available since GSR 1.16.0. |

| speaker-diarization | Boolean | Specifies whether to enable speaker diarization. Available since GSR 1.18.0. |

| min-speaker-count | Integer | Specifies the minimum number of speakers in the conversation. Available since GSR 1.18.0. |

| max-speaker-count | Integer | Specifies the maximum number of speakers in the conversation. Available since GSR 1.18.0. |

| logging-tag | String | Specifies the logging tag. Available since GSR 1.18.0. |

| tag-format | String | Specifies the format of the instance element to be returned. Available since GSR 1.19.0. |

| api | String | Specifies the Speech-to-Text API. Use one of:

|

| service-endpoint | String | Specifies the service endpoint. Available since GSR 1.20.0. |

| region | String | Specifies the region. The global endpoint is used if the region is not specified. Available since GSR 1.20.0. |

| gapp-credentials-file | File path | Specifies the Google Application Credentials file to use. File name may include patterns containing * sign. If multiple files match the pattern, the most recent one gets used. Available since GSR 1.20.0. |

| adaptation | String | Specifies a JSON representation of the SpeechAdaptation structure. Available since GSR 1.21.0 in v1p1beta1 and GSR 1.25.0 in v1. |

| transcript-normalization | String | Specifies a JSON representation of the TranscriptNormalization structure. Available since GSR 1.25.0 in v1p1beta1 only. |

| save-waveform | Boolean | Specifies whether to save the original utterance. Available since GSR 1.24.0. |

| save-rdr | Boolean | Specifies whether to save the Recognition Details Record. Available since GSR 1.24.0. |

| project-id | String | Specifies the project id used in the resource name of the recognizer. This parameter is observed only when the api is set to v2. Available since GSR 1.29.0. |

| recognizer-id | String | Specifies the recognizer id used in the resource name of the recognizer. This parameter is observed only when the api is set to v2. Available since GSR 1.29.0. |

| recognizer-name | String | Specifies the full recognizer name. This parameter is observed only when the api is set to v2. Available since GSR 1.29.0. |

All the vendor-specific parameters can also be specified at the grammar-level via a built-in or SRGS XML grammar.

The following example demonstrates the use of a built-in grammar with the vendor-specific parameters alternatives-below-threshold and speech-start-timeout set to true and 100 correspondingly.

builtin:speech/transcribe?alternatives-below-threshold=true;speech-start-timeout=100

The following example demonstrates the use of an SRGS XML grammar with the vendor-specific parameters alternatives-below-threshold and speech-start-timeout set to true and 100 correspondingly.

<grammar mode="voice" root="transcribe" version="1.0" xml:lang="en-US" xmlns="http://www.w3.org/2001/06/grammar">

<meta name="scope" content="builtin"/>

<meta name="alternatives-below-threshold" content="true"/>

<meta name="speech-start-timeout" content="100"/>

<rule id="transcribe">

<one-of ><item>blank</item></one-of>

</rule>

</grammar>

¶ 4.9 Using v2 API

To use the Google Speech-to-Text API, the api parameter must be set to v2 and an existing recognizer resource must be referenced by either the resource-name parameter, or composite the resource-id, project-id, region parameters. The parameters can be set globally in the configuration file umsgsr.xml or overriden per the first request in an MRCP session. For example:

builtin:speech/transcribe?api=v2;recognizer-id=your-recognizer-id;project-id=your-project-id

¶ 4.10 Maintaining Utterances

Saving of utterances is not required for regular operation and is disabled by default. However, enabling this functionality allows to save utterances sent to the Google Cloud Speech service and later listen to them offline.

The relevant settings can be specified via the element utterance-manager.

- save-waveforms

Utterances can optionally be recorded and stored if the configuration parameter save-waveforms is set to true. The parameter can be overridden per MRCP session by setting the header field Save-Waveforms in a SET-PARAMS or RECOGNIZE request.

- purge-existing

This parameter specifies whether to delete existing waveforms on start-up.

- max-file-age

This parameter specifies a time interval in minutes after expiration of which a waveform is deleted. If set to 0, there is no expiration time specified.

- max-file-count

This parameter specifies the maximum number of waveforms to store. If the specified number is reached, the oldest waveform is deleted. If set to 0, there is no limit specified.

- waveform-base-uri

This parameter specifies the base URI used to compose an absolute waveform URI returned in the header field Waveform-Uri in response to a RECOGNIZE request.

- waveform-folder

This parameter specifies a path to the directory used to store waveforms in. The directory defaults to ${UniMRCPInstallDir}/var.

¶ 4.11 Maintaining Recognition Details Records

Producing of recognition details records (RDR) is not required for regular operation and is disabled by default. However, enabling this functionality allows to store details of each recognition attempt in a separate file and analyze them later offline. The RDRs ate stored in the JSON format.

The relevant settings can be specified via the element rdr-manager.

- save-records

This parameter specifies whether to save recognition details records or not.

- purge-existing

This parameter specifies whether to delete existing records on start-up.

- max-file-age

This parameter specifies a time interval in minutes after expiration of which a record is deleted. If set to 0, there is no expiration time specified.

- max-file-count

This parameter specifies the maximum number of records to store. If the specified number is reached, the oldest record is deleted. If set to 0, there is no limit specified.

- record-folder

This parameter specifies a path to the directory used to store records in. The directory defaults to ${UniMRCPInstallDir}/var.

¶ 5 Recognition Grammars and Results

¶ 5.1 Using Built-in Speech Contexts

Pre-set built-in speech contexts can be referenced by the MRCP client in a RECOGNIZE request as follows:

builtin:speech/$id

Where $id is a unique string identifier of built-in speech context.

Speech contexts are defined in the configuration file umsgsr.xml. A speech context is assigned a unique string identifier and holds a list of phrases which can optionally be passed to the Google Cloud Speech service to improve the recognition accuracy.

Below is a definition of a sample speech context directory:

<speech-context id="directory">

<phrase>call Steve</phrase>

<phrase>call John</phrase>

<phrase>dial 5</phrase>

<phrase>dial 6</phrase>

</speech-context>

Which can be referenced in a RECOGNIZE request as follows:

builtin:speech/directory

Since GSR 1.8.0, the prefixes builtin:speech and builtin:grammar can be used interchangeably as follows:

builtin:grammar/directory

For generic speech transcription, having no speech contexts defined, a pre-set identifier transcribe must be used.

builtin:speech/transcribe

The name of the identifier transcribe can be changed from the configuration file umsgsr.xml, since GSR 1.8.0.

Since GSR 1.11.0, a speech context can be referenced by means metadata in SRGS XML grammar. For example, the following SRGS grammar references a built-in speech context directory.

<grammar mode="voice" root="directory" version="1.0"

xml:lang="en-US"

xmlns="http://www.w3.org/2001/06/grammar">

<meta name="scope" content="builtin"/>

<rule id="directory"><one-of/></rule>

</grammar>

Where the root rule name identifies a speech context.

¶ 5.2 Using Dynamic Speech Contexts

The MRCP client can also dynamically specify a speech context either

-

in a DEFINE-GRAMMAR request by further referencing the defined speech context in a RECOGNIZE request using the session URI scheme

-

or inline in a RECOGNIZE request

While composing a DEFINE-GRAMMAR or RECOGNIZE request containing speech context definition, the following should be considered.

-

The value of the header field Content-Id must be used as a unique string identifier of the speech context being defined.

-

The value of the header field Content-Type must be set to application/xml.

-

The message body must contain a definition of the speech context, composed based on the XML format of the element <speech-context>, specified in the configuration file umsgsr.xml. Note that the unique identifier of the speech context is set based on the header field Content-Id, as opposed to the attribute Id when loading from configuration.

Since GSR 1.11.0, a dynamic speech context can be specified by means of the <one-of> construct in SRGS XML grammar. For example:

<grammar mode="voice" root="booking" version="1.0"

xml:lang="en-US"

xmlns="http://www.w3.org/2001/06/grammar">

<meta name="scope" content="hint"/>

<rule id="booking">

<one-of>

<item> I would like to book a flight from New York to Rome with a ticket eligible for free cancellation</item>

<item> I would like to book a one-way flight from New York to Rome</item>

<one-of>

</rule>

</grammar>

¶ 5.3 Using Built-in DTMF Grammars

Pre-set built-in DTMF grammars can be referenced by the MRCP client in a RECOGNIZE request as follows:

builtin:dtmf/$id

Where $id is a unique string identifier of the built-in DTMF grammar. For example:

builtin:dtmf/digits

Note that only a DTMF grammar identifier digits is currently supported.

Since GSR 1.11.0, built-in DTMF digits can also be referenced by metadata in SRGS XML grammar. The following example is equivalent to the built-in grammar above.

<grammar mode="dtmf" root="digits" version="1.0"

xml:lang="en-US"

xmlns="http://www.w3.org/2001/06/grammar">

<meta name="scope" content="builtin"/>

<rule id="digits"><one-of/></rule>

</grammar>

Where the root rule name identifies a built-in DTMF grammar.

¶ 5.4 Retrieving Results

Results received from the Google Cloud Speech service are transformed to the NLSML format with no semantic interpretation performed and sent to the MRCP client in a RECOGNITION-COMPLETE event.

Since GSR 1.11.0, limited support for semantic interpretation is added to processing of SRGS XML grammars. Only <one-of> construct and literal tags are currently supported.

¶ 6 Monitoring Usage Details

The number of in-use and total licensed channels can be monitored in several alternate ways. There is a set of actions which can take place on certain events. The behavior is configurable via the element monitoring-agent, which contains two event handlers: usage-change-handler and usage-refresh-handler.

While the usage-change-handler is invoked on every acquisition and release of a licensed channel, the usage-refresh-handler is invoked periodically on expiration of a timeout specified by the attribute refresh-period.

The following actions can be specified for either of the two handlers.

¶ 6.1 Log Usage

The action log-usage logs the following data in the order specified.

-

The number of currently in-use channels.

-

The maximum number of channels used concurrently. Available since GSR 1.9.0.

-

The total number of licensed channels.

The following is a sample log statement, indicating 0 in-use, 0 max-used and 2 total channels.

[NOTICE] GSR Usage: 0/0/2

¶ 6.2 Update Usage

The action update-usage writes the following data to a status file umsgsr-usage.status, located by default in the directory ${UniMRCPInstallDir}/var/status.

- The number of currently in-use channels.

- The maximum number of channels used concurrently. Available since GSR 1.9.0.

- The total number of licensed channels.

- The current status of the license permit.

- The license server alarm. Set to on, if the license server is not available for more than one hour; otherwise, set to off. This parameter is maintained only if the license server is used. Available since GSR 1.12.0.

The following is a sample content of the status file.

in-use channels: 0

max used channels: 0

total channels: 2

license permit: true

licserver alarm: off

¶ 6.3 Dump Channels

The action dump-channels writes the identifiers of in-use channels to a status file umsgsr-channels.status, located by default in the directory ${UniMRCPInstallDir}/var/status.

¶ 7 Usage Examples

¶ 7.1 Speech Recognition without Speech Context

This example demonstrates how to perform speech recognition by using a RECOGNIZE request, having no speech contexts defined.

C->S:

MRCP/2.0 336 RECOGNIZE 1

Channel-Identifier: 6e1a2e4e54ae11e7@speechrecog

Content-Id: request1@form-level

Content-Type: text/uri-list

Cancel-If-Queue: false

No-Input-Timeout: 5000

Recognition-Timeout: 10000

Start-Input-Timers: true

Confidence-Threshold: 0.87

Save-Waveform: true

Content-Length: 25

builtin:speech/transcribe

S->C:

MRCP/2.0 83 1 200 IN-PROGRESS

Channel-Identifier: 6e1a2e4e54ae11e7@speechrecog

S->C:

MRCP/2.0 115 START-OF-INPUT 1 IN-PROGRESS

Channel-Identifier: 6e1a2e4e54ae11e7@speechrecog

Input-Type: speech

S->C:

MRCP/2.0 498 RECOGNITION-COMPLETE 1 COMPLETE

Channel-Identifier: 6e1a2e4e54ae11e7@speechrecog

Completion-Cause: 000 success

Waveform-Uri: <http://localhost/utterances/utter-6e1a2e4e54ae11e7-1.wav>;size=20480;duration=1280

Content-Type: application/x-nlsml

Content-Length: 214

<?xml version="1.0"?>

<result>

<interpretation grammar="builtin:speech/transcribe" confidence="0.88">

<instance>call Steve</instance>

<input mode="speech">call Steve</input>

</interpretation>

</result>

¶ 7.2 Speech Recognition with Built-in Speech Context

This example demonstrates how to perform speech recognition by using a RECOGNIZE request to reference a pre-set built-in speech context directory on the server.

C->S:

MRCP/2.0 335 RECOGNIZE 1

Channel-Identifier: 3ea18b9854af11e7@speechrecog

Content-Id: request1@form-level

Content-Type: text/uri-list

Cancel-If-Queue: false

No-Input-Timeout: 5000

Recognition-Timeout: 10000

Start-Input-Timers: true

Confidence-Threshold: 0.87

Save-Waveform: true

Content-Length: 24

builtin:speech/directory

S->C:

MRCP/2.0 83 1 200 IN-PROGRESS

Channel-Identifier: 3ea18b9854af11e7@speechrecog

S->C:

MRCP/2.0 115 START-OF-INPUT 1 IN-PROGRESS

Channel-Identifier: 3ea18b9854af11e7@speechrecog

Input-Type: speech

S->C:

MRCP/2.0 497 RECOGNITION-COMPLETE 1 COMPLETE

Channel-Identifier: 3ea18b9854af11e7@speechrecog

Completion-Cause: 000 success

Waveform-Uri: <http://localhost/utterances/utter-3ea18b9854af11e7-1.wav>;size=20480;duration=1280

Content-Type: application/x-nlsml

Content-Length: 213

<?xml version="1.0"?>

<result>

<interpretation grammar="builtin:speech/directory" confidence="0.88">

<instance>call Steve</instance>

<input mode="speech">call Steve</input>

</interpretation>

</result>

¶ 7.3 Speech Recognition with Dynamic Speech Context

This example demonstrates how to perform speech recognition, by using a DEFINE-GRAMMAR request to specify a speech context and further reference the defined speech context in a RECOGNIZE request.

C->S:

MRCP/2.0 314 DEFINE-GRAMMAR 1

Channel-Identifier: 25902c3a54b011e7@speechrecog

Content-Type: application/xml

Content-Id: request1@form-level

Content-Length: 146

<speech-context>

<phrase>call Steve</phrase>

<phrase>call John</phrase>

<phrase>dial 5</phrase>

<phrase>dial 6</phrase>

</speech-context>

S->C:

MRCP/2.0 112 1 200 COMPLETE

Channel-Identifier: 25902c3a54b011e7@speechrecog

Completion-Cause: 000 success

C->S:

MRCP/2.0 305 RECOGNIZE 2

Channel-Identifier: 25902c3a54b011e7@speechrecog

Content-Type: text/uri-list

Cancel-If-Queue: false

No-Input-Timeout: 5000

Recognition-Timeout: 10000

Start-Input-Timers: true

Confidence-Threshold: 0.87

Save-Waveform: true

Content-Length: 27

session:request1@form-level

S->C:

MRCP/2.0 83 2 200 IN-PROGRESS

Channel-Identifier: 25902c3a54b011e7@speechrecog

S->C:

MRCP/2.0 115 START-OF-INPUT 2 IN-PROGRESS

Channel-Identifier: 25902c3a54b011e7@speechrecog

Input-Type: speech

S->C:

MRCP/2.0 500 RECOGNITION-COMPLETE 2 COMPLETE

Channel-Identifier: 25902c3a54b011e7@speechrecog

Completion-Cause: 000 success

Waveform-Uri: <http://localhost/utterances/utter-25902c3a54b011e7-2.wav>;size=20480;duration=1280

Content-Type: application/x-nlsml

Content-Length: 216

<?xml version="1.0"?>

<result>

<interpretation grammar="session:request1@form-level" confidence="0.88">

<instance>call Steve</instance>

<input mode="speech">call Steve</input>

</interpretation>

</result>

¶ 7.4 DTMF Recognition with Built-in Grammar

This example demonstrates how to reference a built-in DTMF grammar in a RECOGNIZE request.

C->S:

MRCP/2.0 266 RECOGNIZE 1

Channel-Identifier: d26bef74091a174c@speechrecog

Content-Type: text/uri-list

Cancel-If-Queue: false

Start-Input-Timers: true

Confidence-Threshold: 0.7

Speech-Language: en-US

Dtmf-Term-Char: #

Content-Length: 19

builtin:dtmf/digits

S->C:

MRCP/2.0 83 1 200 IN-PROGRESS

Channel-Identifier: d26bef74091a174c@speechrecog

S->C:

MRCP/2.0 113 START-OF-INPUT 1 IN-PROGRESS

Channel-Identifier: d26bef74091a174c@speechrecog

Input-Type: dtmf

S->C:

MRCP/2.0 382 RECOGNITION-COMPLETE 1 COMPLETE

Channel-Identifier: d26bef74091a174c@speechrecog

Completion-Cause: 000 success

Content-Type: application/x-nlsml

Content-Length: 197

<?xml version="1.0"?>

<result>

<interpretation grammar="builtin:dtmf/digits" confidence="1.00">

<input mode="dtmf">1 2 3 4</input>

<instance>1234</instance>

</interpretation>

</result>

¶ 7.5 Speech and DTMF Recognition

This example demonstrates how to perform recognition by activating both speech and DTMF grammars. In this example, the user is expected to input a 4-digit pin.

C->S:

MRCP/2.0 275 RECOGNIZE 1

Channel-Identifier: 6ae0f23e1b1e3d42@speechrecog

Content-Type: text/uri-list

Cancel-If-Queue: false

Start-Input-Timers: true

Confidence-Threshold: 0.7

Speech-Language: en-US

Content-Length: 47

builtin:dtmf/digits?length=4

builtin:speech/pin

S->C:

MRCP/2.0 83 2 200 IN-PROGRESS

Channel-Identifier: 6ae0f23e1b1e3d42@speechrecog

S->C:

MRCP/2.0 115 START-OF-INPUT 2 IN-PROGRESS

Channel-Identifier: 6ae0f23e1b1e3d42@speechrecog

Input-Type: speech

S->C:

MRCP/2.0 399 RECOGNITION-COMPLETE 2 COMPLETE

Channel-Identifier: 6ae0f23e1b1e3d42@speechrecog

Completion-Cause: 000 success

Content-Type: application/x-nlsml

Content-Length: 214

<?xml version="1.0"?>

<result>

<interpretation grammar=" builtin:speech/pin" confidence="1.00">

<instance>one two three four</instance>

<input mode="speech">one two three four</input>

</interpretation>

</result>

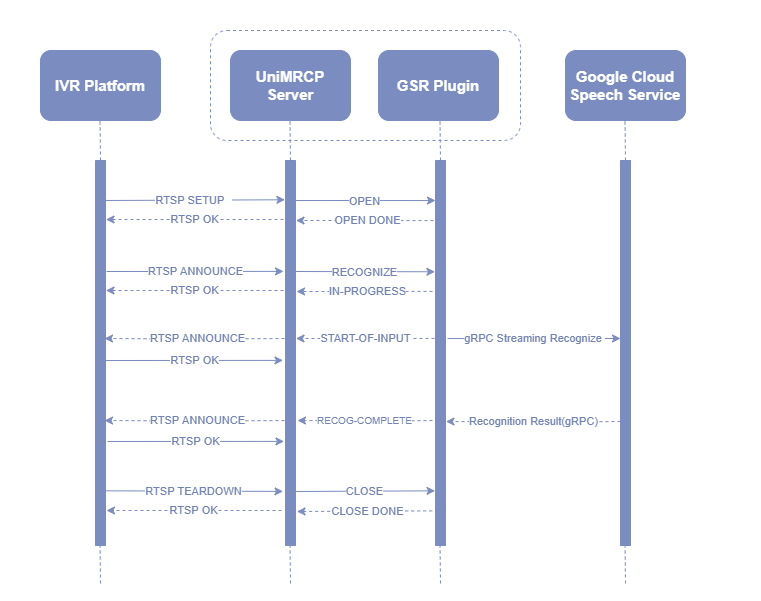

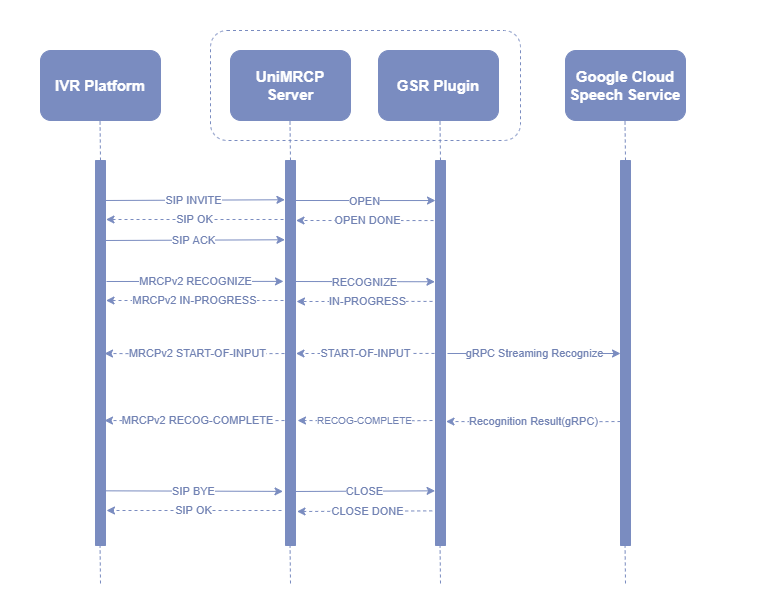

¶ 8 Sequence Diagrams

The following sequence diagrams outline common interactions between all the main components involved in a typical recognition session performed over MRCPv1 and MRCPv2 respectively.

¶ 8.1 MRCPv1

¶ 8.2 MRCPv2

¶ 9 Security Considerations

¶ 9.1 Network Connection

All the data transmitted to and received from the Google Cloud Speech API is carried over a secure TLS v1.2 connection via the gRPC streaming.

It is not even allowed to establish an unsecure connection to any of Google Cloud APIs in general.

¶ 9.2 Network Port

The standard TLS port 443 is used for the gRPC streaming,

RPM Installation Manual Red Hat / CentOS

RPM Installation Manual Red Hat / CentOS Deb Installation Manual Debian / Ubuntu

Deb Installation Manual Debian / Ubuntu